by Horațiu Dan

Since every Large Language Model (LLM) has a training cut-off date, its accuracy suffers considerably when real-time or future data is requested. This happens even when users write thoroughly engineered prompts, because the answers are generated from content predicted on a static knowledge foundation. Such a situation is not always acceptable. To overcome this, AI assistants (chatbots) are now being enhanced with Internet access, which allows them to articulate more relevant and up-to-date “opinions”.

In the case of Anthropic, as of April 2025, web search has been made available to all paid Claude AI plans. This is definitely a step forward, as the information users receive can now be enriched with additional “contemporary” details, which increases its accuracy.

The key contributor here is, without any doubt, the Model Context Protocol (MCP). MCP was created and developed by Anthropic with the clear intent of standardizing how context is brought into AI applications from external data sources.

This article first provides a few insights into MCP and its architecture. It then shows how Claude Desktop can leverage MCP to gain real-time web search solely through configuration, by plugging in dedicated MCP servers. It also analyzes a real use case, which allows a user to obtain the fixtures of rugby matches scheduled for the upcoming weekend and then save them to a text file on the local machine. Although by now most AI assistants (chatbots) have already been enhanced with web search functionality, the purpose of this experiment is to make it easier to understand how MCP makes this possible.

Since the setup described requires no code, the target audience is both technical and non-technical.

Model Context Protocol (MCP)

General Aspects

According to its creator, Anthropic, MCP is an open protocol that standardizes how AI applications connect to and work with additional data sources. The purpose is to enrich the context of the underlying LLMs so that their responses are more precise. To draw an analogy: just as REST APIs allow web applications to communicate with backends, MCP defines how AI applications interact with external systems.

When applications are built to be MCP compatible, concerns are clearly separated and the responsibility for reaching external data moves to other applications, the MCP servers. These are focused, granular and oriented towards a particular kind of data. An MCP server can be seen as a reusable wrapper or gateway over an API, which the AI application calls whenever needed, while the user simply expresses the need in natural language.

Moreover, MCP standardizes AI development in general: for AI application developers (who can connect their applications to any MCP server directly), for API developers (who can build an MCP server once and use it in multiple places) and for AI application users and enterprises.

The MCP ecosystem is already quite broad, comprising a variety of products (AI applications, AI agents, servers) developed by both open-source contributors and enterprises, and it is expected to grow at an accelerated pace. SDKs are currently available for multiple programming languages, allowing a great variety of MCP servers to be developed, either privately or as open source. Thus, the future looks promising in this direction.

Architecture

MCP is built on a client-server architecture. Since this is an introductory article, it will not go into much detail. From a very high-level point of view, the MCP architecture can be depicted as in the picture below.

A host runs one or more MCP clients, each of which establishes a one-to-one connection with a specific MCP server and communicates with it via messages defined by the protocol itself. An example of such a host is Claude Desktop, the one used later in this article. As already mentioned, an MCP server is a lightweight application that exposes specific data and functionality through the protocol via:

- Tools – enable the execution of functions, API calls and actions in external systems (e.g. web search, used later in this article)

- Resources – provide read-only access to files, databases, web APIs

- Prompt Templates – offer context-specific instructions and largely relieve the user of prompt engineering
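For readers who want a peek under the hood (entirely optional, since the setup below needs no code), each of these primitives maps onto JSON-RPC methods defined by the MCP specification: one to discover what a server offers and one to use an item. The Python sketch below is an illustrative lookup table, not part of any SDK; `MCP_PRIMITIVE_METHODS` and `discovery_request` are hypothetical names.

```python
import json

# JSON-RPC method names from the MCP specification, grouped by server primitive.
# The first method discovers what a server offers; the second uses one item.
MCP_PRIMITIVE_METHODS = {
    "tools": ("tools/list", "tools/call"),
    "resources": ("resources/list", "resources/read"),
    "prompts": ("prompts/list", "prompts/get"),
}


def discovery_request(primitive: str, request_id: int) -> str:
    """Build a JSON-RPC 2.0 request asking a server to list one kind of primitive.

    Hypothetical helper, for illustration only.
    """
    list_method, _use_method = MCP_PRIMITIVE_METHODS[primitive]
    message = {"jsonrpc": "2.0", "id": request_id, "method": list_method}
    return json.dumps(message)


if __name__ == "__main__":
    for index, primitive in enumerate(MCP_PRIMITIVE_METHODS, start=1):
        print(discovery_request(primitive, index))
```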

Briefly, the workflow is the following:

- A user sends a query to the host (AI assistant)

- The MCP client identifies what external resources/tools are needed and communicates with the appropriate MCP server

- The MCP server interacts with external systems (databases, APIs, file systems)

- The results flow back through the MCP server to the MCP client

- The AI assistant processes the data and the host responds to the user

The communication between the MCP client and the MCP server follows a simple lifecycle: the connection is first initialized, then messages (requests, responses and notifications) are exchanged, and finally the connection is terminated.
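As an optional illustration of this lifecycle, the Python sketch below builds the JSON-RPC 2.0 messages a client would send at the start of a session. It assumes the protocol revision string "2025-03-26" and a hypothetical client name; real clients negotiate the version with the server, and the messages here are only built, never sent.

```python
import json

# Assumption: "2025-03-26" is the MCP protocol revision being requested;
# the server answers with the version it actually supports.
PROTOCOL_VERSION = "2025-03-26"


def initialize_request(request_id: int) -> dict:
    """Step 1: the client opens the session and announces its capabilities."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            # Hypothetical client identity, for illustration only.
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def initialized_notification() -> dict:
    """Step 2: after the server's reply, the client confirms it is ready.

    Notifications carry no "id", because no response is expected.
    """
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


def lifecycle_messages() -> list[dict]:
    """Messages a client sends during a short session.

    Termination has no dedicated message: the client simply closes the
    transport (with stdio, the server's input stream).
    """
    return [
        initialize_request(1),
        initialized_notification(),
        {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},  # normal operation
    ]


if __name__ == "__main__":
    for message in lifecycle_messages():
        print(json.dumps(message))
```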

One last architectural consideration concerns the MCP transport type, which handles the actual exchange of messages between the client and the server. Depending on the server type, there are several possibilities. Local servers use standard input and output (stdio), while remote ones use either HTTP with Server-Sent Events (HTTP+SSE), which keeps a stateful connection, or Streamable HTTP, which allows both stateful and stateless connections.
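For the stdio transport used by local servers, the MCP specification frames each JSON-RPC message as a single line of text, so a message must not contain raw newlines. A minimal Python sketch of that framing follows; the function names are hypothetical and no server process is actually spawned.

```python
import json

# With the stdio transport, every JSON-RPC message is one line of UTF-8 text:
# client -> server on the server's stdin, server -> client on its stdout.


def encode_stdio_message(message: dict) -> bytes:
    """Serialize one message as a single newline-terminated line."""
    # Without indent, json.dumps escapes newlines inside strings as \n,
    # so the serialized message itself never contains a raw line break.
    line = json.dumps(message, separators=(",", ":"))
    return (line + "\n").encode("utf-8")


def decode_stdio_stream(data: bytes) -> list[dict]:
    """Split received bytes on newlines and parse each non-empty line.

    Assumes `data` holds only complete lines; a real reader buffers partial ones.
    """
    lines = data.decode("utf-8").split("\n")
    return [json.loads(line) for line in lines if line.strip()]


if __name__ == "__main__":
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "example_tool", "arguments": {"text": "two\nlines"}},
    }
    framed = encode_stdio_message(request)
    print(framed)
    print(decode_stdio_stream(framed) == [request])
```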

Although MCP is brand new, many resources describing it are already available. Studying them is recommended, since a thorough understanding should come before starting to be creative.

To get a first glimpse, a concrete use case is described next, with quite a lot of detail. Readers who find some steps obvious may skip ahead. Nevertheless, the results obtained are quite interesting and satisfactory.

Use-Case

The Initial LLM Interaction

Let’s assume the user is interested in rugby in general and particularly in finding out which matches are scheduled this upcoming weekend in the Super Rugby Pacific competition, in which the best clubs of the southern hemisphere compete.

Since Claude Desktop will be used, the first precondition is to install it locally (see [Resource 1] for guidance). For the experiment described in this article, signing up for a Claude AI free plan is enough. Once the application is started, one can begin interacting with the available LLMs. Let’s select the default model, Claude 3.7 Sonnet (although the newer Claude Sonnet 4 is already available as well).

For the interaction to be as efficient and pleasant as possible, one should normally leverage a very important skill, which I like to believe we all acquired in elementary school and have improved since: the ability to clearly formulate a question on a topic of interest and to write it down grammatically correctly.

LLMs do a pretty good job even when users make typos or express themselves ambiguously. Humans, on the other hand, have a conscience and should strive to respect both themselves and others. Thus, the better we formulate instructions and requests, the better these LLMs evolve and the better they respond.

Our starting prompt, passed to Claude Desktop, is: What is the schedule and the kickoff times of the matches in the ‘Super Rugby Pacific’ competition for the upcoming Friday, Saturday and Sunday?

The answer, though, is of no use, as the model was asked for information that lies chronologically after its training cut-off date (October 2024). Moreover, the locally running client has no access to real-time data, events or other local resources, so it cannot help in this scenario either.

In the brief introduction above, it was mentioned that an AI client can be enriched with context via MCP by connecting it to an existing MCP server, which delivers insights from a particular data source.

[Resource 2] provides a wide collection of available, ready-to-use MCP servers. To solve the problem at hand, the Brave Search MCP server [Resource 3] is plugged into Claude Desktop via configuration, which adds real-time web search functionality to the client through the Brave Search API.

Plugging Brave Search MCP Server into Claude Desktop

To use the Brave Search API via the Brave Search MCP server, one must first sign up and generate an API key. This can be done by accessing [Resource 4] and following the straightforward steps described there. The free subscription is limited to 1 request per second and a maximum of 2000 requests per month, which, again, is enough for experimentation purposes. Nevertheless, a credit card is required.

Once the API key is available, it can be used in the Claude Desktop configuration to set up the MCP server (see [Resource 3]).
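Behind the scenes, the Brave Search MCP server turns each search into an HTTP call authenticated with this API key. The Python sketch below only prepares, and does not send, such a request. It assumes the web search endpoint and the `X-Subscription-Token` header documented for the Brave Search API; `build_brave_request` is a hypothetical helper, not the server's actual code.

```python
import urllib.parse
import urllib.request

# Assumption: endpoint as documented for the Brave Search API web search.
BRAVE_WEB_SEARCH_URL = "https://api.search.brave.com/res/v1/web/search"


def build_brave_request(query: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a web search request authenticated by the API key."""
    url = BRAVE_WEB_SEARCH_URL + "?" + urllib.parse.urlencode({"q": query})
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/json",
            "X-Subscription-Token": api_key,
        },
    )


if __name__ == "__main__":
    request = build_brave_request("Super Rugby Pacific fixtures", "the-api-key")
    print(request.get_method(), request.full_url)
    # Sending it would be urllib.request.urlopen(request); on the free plan,
    # keep to at most 1 request per second.
```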

{
  "mcpServers": {
    "brave-search": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-brave-search"
      ],
      "env": {
        "BRAVE_API_KEY": "the-api-key"
      }
    }
  }
}

In Claude Desktop, go to File > Settings, select Developer, then click Edit Config. Pressing the button points the user to a JSON file, into which the snippet above should be written. In my case, as I use a Windows machine, this is

C:\Users\horatiu.dan\AppData\Roaming\Claude\claude_desktop_config.json

If such a file does not exist, create it first, then add the snippet. If the file exists, add the brave-search MCP server in its proper place, as part of the mcpServers attribute. If unsure what a JSON file looks like, take a moment to understand the format first, then make sure the content of the file is syntactically correct.
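Editing the file by hand works well. For those who prefer to avoid JSON syntax mistakes, the Python sketch below adds a server entry under `mcpServers` while keeping any servers already configured. `add_mcp_server` is a hypothetical helper, and the demo writes to a temporary directory rather than to the real configuration path.

```python
import json
import tempfile
from pathlib import Path


def add_mcp_server(config_path: Path, name: str, entry: dict) -> dict:
    """Add or replace one server under "mcpServers", keeping all other settings.

    The file is created if missing. An existing file with invalid JSON makes
    json.loads raise json.JSONDecodeError, so it is never silently overwritten.
    """
    config = {}
    if config_path.exists():
        # "utf-8-sig" also accepts a byte-order mark that some Windows editors add.
        text = config_path.read_text(encoding="utf-8-sig")
        if text.strip():
            config = json.loads(text)
    config.setdefault("mcpServers", {})[name] = entry
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config


if __name__ == "__main__":
    brave_entry = {
        "command": "npx",
        "args": ["-y", "@modelcontextprotocol/server-brave-search"],
        "env": {"BRAVE_API_KEY": "the-api-key"},
    }
    # Demo in a temporary folder; the real file lives under %APPDATA%\Claude on Windows.
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "claude_desktop_config.json"
        add_mcp_server(target, "brave-search", brave_entry)
        print(target.read_text(encoding="utf-8"))
```

The same call, with the name "filesystem" and the corresponding entry, would cover the second server added later in this article. Either way, Claude Desktop needs a restart to pick up the change.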

Before the brave-search MCP server can be used inside Claude Desktop, there is one more prerequisite: the server needs to be run. In this experiment, it is run with Node.js (the configuration invokes npx), so Node.js must be installed and made available on the local path.

The simplest way to install Node.js and make it available is by using the Node Version Manager (nvm).

- Download nvm-setup.exe, preferably the latest version. See [Resource 5] for Windows machines.

- Install Node Version Manager (nvm)

C:\Users\horatiu.dan> nvm-setup.exe

An NVM_HOME system variable pointing to the nvm installation is created. Make sure it is appended to the Path system variable.

- Install Node Package Manager (npm) and Node.js

C:\Users\horatiu.dan> nvm install latest

Once the installation completes, a folder containing Node.js is created inside the nvm installation directory. Make sure this folder is added to the Path system variable as well.

- To check the installations, issue the commands below.

C:\Users\horatiu.dan>nvm -v
1.2.2

C:\Users\horatiu.dan>npm -v
11.3.0

C:\Users\horatiu.dan>node -v
v24.0.2
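Since Claude Desktop launches the servers through `npx`, one more way to double-check the Path setup, beyond the version commands above, is Python's `shutil.which`. This sketch assumes Python is available on the machine; `find_tools` is a hypothetical helper.

```python
import shutil

# Both servers in this article are started with "npx", which in turn relies on
# node and npm, so all three should be discoverable on the Path.
REQUIRED_TOOLS = ("node", "npm", "npx")


def find_tools(names=REQUIRED_TOOLS) -> dict:
    """Return the full path of each executable, or None when it is not on the Path."""
    # On Windows, shutil.which also tries the PATHEXT extensions (.exe, .cmd, ...).
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for name, location in find_tools().items():
        print(f"{name}: {location or 'NOT FOUND - check the Path system variable'}")
```

Note that a Path change is only visible to applications started after it, which is one more reason to restart Claude Desktop.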

If all steps have been completed as advised, the brave-search MCP server should be available and ready to use after restarting Claude Desktop.

The Insightful LLM Interaction

This experiment was performed on Thursday, 22 May 2025. The detail is important, as it allows checking whether the LLM responses match the actual fixtures and kickoff times.

Once the setup is complete, the question may be asked again. As the requested information concerns the near future, the Claude client now uses the brave-search MCP server integration to find the relevant details. Whenever Claude decides it should use the server, it first asks for permission. If permission is granted, it continues compiling the response.

Depending on the intermediate results found and the conclusions inferred, the model may perform several web searches via the Brave Search API, as it sees fit.

Here, it performed three searches, with the following actual requests:

{ query: Super Rugby Pacific schedule fixtures kickoff times May 24 25 26 2025 }

{ query: Super Rugby Pacific Round 14 matches May 23 24 25 2025 kickoff times }

{ query: Super Rugby Pacific Round 14 May 23 24 25 2025 schedule fixtures crusaders highlanders }
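On the wire, each of these searches is a `tools/call` request from the MCP client to the brave-search server. The Python sketch below rebuilds the three requests as JSON-RPC 2.0 messages. It assumes the server's tool is named `brave_web_search` and takes the `query` argument seen above; `tool_call_request` is a hypothetical helper.

```python
import json

# Assumption: the Brave Search MCP server exposes a tool with this name,
# whose "query" argument matches the requests shown above.
TOOL_NAME = "brave_web_search"

QUERIES = [
    "Super Rugby Pacific schedule fixtures kickoff times May 24 25 26 2025",
    "Super Rugby Pacific Round 14 matches May 23 24 25 2025 kickoff times",
    "Super Rugby Pacific Round 14 May 23 24 25 2025 schedule fixtures crusaders highlanders",
]


def tool_call_request(request_id: int, query: str) -> dict:
    """JSON-RPC 2.0 request an MCP client sends to invoke a server tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": TOOL_NAME, "arguments": {"query": query}},
    }


if __name__ == "__main__":
    # Each request gets its own id so that its response can be matched to it.
    for request_id, query in enumerate(QUERIES, start=1):
        print(json.dumps(tool_call_request(request_id, query)))
```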

Step by step, the response is created, as depicted below. Luckily for the user, it is pretty accurate, as we will see next.

When compared with the information available on the official website of the competition [Resource 6], Claude, together with the brave-search MCP server, proved quite helpful.

To go one step further, the model offered to convert the kickoff times to another time zone. Why not? Let’s see them in the Romanian time zone, the one the browser used when the official website was visited (I am based in Romania).

Success! We have the fixtures for the coming weekend.

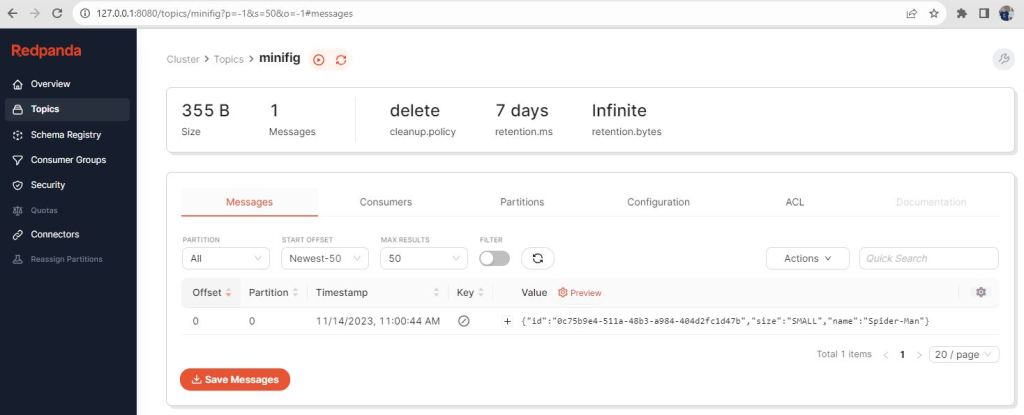
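The time zone conversion the model offered is simple arithmetic on UTC offsets. The Python sketch below takes the first kickoff from the report saved later in this article (10:05 on Friday, 23 May 2025, Romanian time) and expresses the same instant in UTC and in New Zealand Standard Time. It assumes fixed offsets (EEST is UTC+3, NZST is UTC+12) that hold for late May 2025; for arbitrary dates, the `zoneinfo` module is the safer choice.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets valid for late May 2025 (assumption: no daylight saving change
# in between). For arbitrary dates, prefer zoneinfo.ZoneInfo("Europe/Bucharest").
EEST = timezone(timedelta(hours=3), "EEST")   # Romania, summer time
NZST = timezone(timedelta(hours=12), "NZST")  # New Zealand, standard time


def convert(kickoff: datetime, target: timezone) -> datetime:
    """Express the same instant in another time zone."""
    return kickoff.astimezone(target)


if __name__ == "__main__":
    # First kickoff in the saved report: Friday, 23 May 2025, 10:05 Romanian time.
    kickoff_ro = datetime(2025, 5, 23, 10, 5, tzinfo=EEST)
    print(convert(kickoff_ro, timezone.utc).strftime("%Y-%m-%d %H:%M %Z"))
    print(convert(kickoff_ro, NZST).strftime("%Y-%m-%d %H:%M %Z"))
```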

Finalizing the Report Also via MCP

The last step of this experiment is to have the report with the schedule written to a file on the local disk. To accomplish this, the Filesystem MCP server [Resource 7] is plugged into Claude Desktop, similarly to the brave-search one. As its name suggests, this server exposes tools for performing operations on the local file system, so it needs to be used cautiously.

The previously used configuration file,

C:\Users\horatiu.dan\AppData\Roaming\Claude\claude_desktop_config.json,

is edited as below.

{
  "mcpServers": {
    "brave-search": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-brave-search"
      ],
      "env": {
        "BRAVE_API_KEY": "the-api-key"
      }
    },
    "filesystem": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-filesystem",
        "C:/temp"
      ]
    }
  }
}

The filesystem MCP server is also run with Node.js and is allowed to operate only within the C:/temp directory.

Once Claude Desktop is restarted, the newly added server appears as available and ready to be used.

The experiment is completed with one last request to Claude: to save the fixtures to a file on the local disk.

First, Claude attempts to write the file through the filesystem MCP server, but receives the following response:

Error: Access denied - path outside allowed directories: C:\Users\horatiu.dan\AppData\Local\AnthropicClaude\app-0.9.3\super_rugby_fixtures.txt not in C:\temp

In the server configuration in the claude_desktop_config.json file, the only allowed directory is C:\temp, so this first response makes perfect sense.
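The rule behind this error can be sketched as a path containment check. The Python code below is a simplified illustration, not the Filesystem server's actual implementation: it normalizes Windows paths and checks whether the requested file lies inside an allowed directory. A real server should additionally resolve symbolic links on disk, which this pure-path version does not do; `is_path_allowed` is a hypothetical name.

```python
import ntpath
from pathlib import PureWindowsPath


def is_path_allowed(requested: str, allowed_dirs: list[str]) -> bool:
    r"""Return True if `requested` lies inside one of `allowed_dirs`.

    ntpath.normpath first collapses "..", so C:\temp\..\Windows\x.txt is
    treated as C:\Windows\x.txt and rejected when only C:\temp is allowed.
    """
    target = PureWindowsPath(ntpath.normpath(requested))
    for allowed in allowed_dirs:
        base = PureWindowsPath(ntpath.normpath(allowed))
        # Component-wise check: C:\temporary is NOT inside C:\temp.
        if target.is_relative_to(base):
            return True
    return False


if __name__ == "__main__":
    allowed = ["C:/temp"]  # as in claude_desktop_config.json
    for path in (
        r"C:\Users\horatiu.dan\AppData\Local\AnthropicClaude\app-0.9.3\super_rugby_fixtures.txt",
        r"C:\temp\super_rugby_fixtures.txt",
    ):
        print(path, "->", "allowed" if is_path_allowed(path, allowed) else "access denied")
```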

Next, the filesystem MCP server is used to list the allowed directories and, finally, to write the report. This time, the response received is:

Successfully wrote to C:\temp\super_rugby_fixtures.txt

The content of the file:

SUPER RUGBY PACIFIC FIXTURES
May 23-24, 2025 (Romanian Time)

FRIDAY, MAY 23, 2025
10:05 AM - Crusaders vs. Highlanders
12:35 PM - Reds vs. Hurricanes

SATURDAY, MAY 24, 2025
10:05 AM - Chiefs vs. Moana Pasifika
12:35 PM - Force vs. Waratahs

Note: All times are in Romanian local time (EEST/UTC+3)

And with that, the experiment is successfully completed. We worked with the LLM to retrieve the information of interest, delivered in accordance with our intent.

Conclusions

The use case in this article is a good example of how AI assistants (chatbots) can leverage MCP to widen their knowledge base with additional context received “at runtime” from various data sources.

Although most of these assistants now support web search, the purpose here was to introduce MCP and illustrate how multiple MCP servers (two, in this case) can be used together to accomplish a specific scenario. No new code was written, and still the available tools were plugged in and fit together nicely. The stage is now set: in a future article, a dedicated MCP server will be developed and used to provide even more insightful information related to rugby, as it is truly a great sport.

To sum up, MCP essentially acts as a universal adapter that allows AI assistants to securely access and interact with external systems while maintaining a consistent interface. With MCP, AI development is no longer fragmented.

Resources

[1] – Claude Desktop

[2] – MCP Servers Repository

[3] – Brave Search MCP Server

[4] – Brave Search API

[5] – NVM

[6] – Super Rugby Pacific

[7] – Filesystem MCP Server

[8] – The picture is from the official library of Rugby World Cup 2023 France

[9] – Anthropic – Developer Guide – MCP